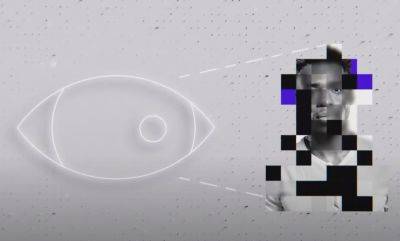

Meta makes progress towards AI system that decodes images from brain activity

The new system combines a non-invasive brain scanning method called magnetoencephalography (MEG) with an artificial intelligence system.

This research builds on the company’s previous work decoding letters, words, and audio spectrograms from intracranial recordings.

According to a Meta blog post,

A post from the AI at Meta account on X, formerly Twitter, showcased the real-time capabilities of the model through a demonstration depicting what an individual was looking at and how the AI decoded their MEG-generated brain scans.

Today we're sharing new research that brings us one step closer to real-time decoding of image perception from brain activity.

Using MEG, this AI system can decode the unfolding of visual representations in the brain with an unprecedented temporal resolution.


It’s worth noting that, despite the progress shown, this experimental AI system requires pre-training on an individual’s brain waves. In essence, rather than training an AI system to read minds, the developers train the system to interpret specific brain waves as specific images. There’s no indication that this system could produce imagery for thoughts unrelated to pictures the model was trained on.

However, Meta AI also notes that this is early work and that further progress is expected. The team has specifically noted that this research is part of the company’s ongoing initiative to unravel the mysteries of the brain.

Related: Neuralink gets FDA approval for ‘in-human’ trials of its brain-computer interface

And, while there’s no current reason to believe a system such as this would be capable of invading someone’s privacy, under the current technological limitations, there is reason to believe that it could provide a

Read more on cointelegraph.com